Deployment previews on Kubernetes

Deployment previews - made popular by platforms like Vercel and Netlify - are not commonplace in microservice architectures. At Blueground, we brought deployment previews to K8s using ArgoCD. Well, it turned out to be so good, it is worth sharing.

Don’t you just love the deployment previews that web platforms like Netlify and Vercel offer for statically generated sites? We certainly do! They promote fast feedback loops in a frictionless, dependable way. In this post, we’ll talk about how we took this idea and ported it over to our Kubernetes-hosted services. It’s easier than you might expect!

The backstory

It was more than a year ago when the static pre-production environments started feeling a bit cumbersome. Even with 1–2 fully fledged, dedicated environments per team, engineers started contesting access to them. Discussions around who has deployed what and on which environment started trending on Slack. When people started lending their dedicated environments to their best friends across teams, we knew we had a problem which had to be dealt with.

One way to deal with it would be to simply spin up more static pre-production environments. But there’s a reason pre-production environments on k8s are a scarce resource: more environments require more k8s nodes, and those come at a cost (a cloud cost, that is). By now, everyone should know that one thing you DON’T want to do is structure your cloud costs poorly for scale. You may also want to be mindful of the ecological footprint of your infra. So we looked for another way, and the concept of rolling out our own flavour of deployment previews on Kubernetes using GitOps started looking more and more appealing by the day. Better now than later, they say, so we put our minds to it and set a soft deadline, as per usual at Blueground. And we met it. From concept to design and from design to implementation, it took about a month. And since you’re reading this post, you guessed right: it turned out pretty well.

Deployment previews brought a huge improvement in developer experience, plus a measurable impact on:

- Cost/Energy: Preview environments live only for a limited period of time, so resources are allocated only when needed instead of 24/7.

- Flexibility: This is a self-service solution in contrast to the static environments which involve the Platform team and usually come with at least a few hours of lead time.

- Simplified configuration: There is no need to maintain changes in any manifest file, since what we need each time is the latest production images for all services, except for the one being previewed.

- Data Readiness: Once the environment is set up and running, we seed it with the latest de-identified production data in ephemeral databases.

- Reduced Maintenance: Due to the ephemeral nature of the environments, there is a considerable reduction in maintenance effort, which usually burdens platform or development teams.

The implementation

Our infrastructure stack includes Kubernetes, Helm, Terraform, ArgoCD, Jenkins, and GitHub as our VCS. While exploring a handcrafted solution and taking the configuration overhead into account, we realized it would take a fair amount of implementation effort. Thankfully, ArgoCD ApplicationSets and, more specifically, the Pull Request generator came in very handy: they saved us a considerable amount of time, which in turn allowed us to focus on the flow orchestration.

Working with ArgoCD ApplicationSets

An ApplicationSet is a Kubernetes custom resource (CRD) handled by the ArgoCD ApplicationSet controller. Its purpose is to automatically generate ArgoCD applications. In our case, each ArgoCD application is a fully fledged environment hosting the whole product. On top of that, ApplicationSets offer generators, which produce ArgoCD applications from various sources (e.g. files in a Git repository, Pull Requests). In this article, we will mainly focus on the Pull Request generator, which helped us deliver preview environments.

Pull Request Generator

Looking closer at the anatomy of an ApplicationSet with the Pull Request generator, we started from the following example:

    apiVersion: argoproj.io/v1alpha1
    kind: ApplicationSet
    metadata:
      name: myapps
    spec:
      generators:
      - pullRequest:
          github:
            # The GitHub organization or user.
            owner: myorg
            # The GitHub repository
            repo: myrepository
            # Reference to a Secret containing an access token. (optional)
            tokenRef:
              secretName: github-token
              key: token
            # Labels is used to filter the PRs that you want to target. (optional)
            labels:
            - preview
          requeueAfterSeconds: 1800
      template:
      # ...

The above snippet refers to the repo that will be observed for any new Pull Requests. A few points worth noting:

- Only the PRs that hold the label “preview” will be matched.

- You can use both a pull and a push approach with GitHub:

  - Pull: requeueAfterSeconds sets the polling interval, in seconds, at which ArgoCD queries GitHub (1800 seconds, i.e. 30 minutes, in the example above).

  - Push: In order to avoid waiting for the next interval, we decided to adopt a push approach. This requires exposing the ArgoCD ApplicationSet controller and then setting up a webhook pointing to it on our GitHub repositories. This made our lives easier, since we get an immediate event whenever a PR is opened or an existing PR changes. You can find more details here.
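For the push approach, exposing the controller can look roughly like the Ingress below. Treat it as a sketch rather than our exact setup: the host name is a placeholder, and the namespace, service name and port assume a default ArgoCD installation, so double-check them against your own cluster.

```yaml
# Sketch: expose the ApplicationSet controller's webhook endpoint.
# Assumptions: ArgoCD runs in the "argocd" namespace and the controller
# Service is named "argocd-applicationset-controller", listening on port 7000.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: argocd-applicationset-webhook      # hypothetical name
  namespace: argocd
spec:
  rules:
  - host: applicationset.example.com       # placeholder host
    http:
      paths:
      - path: /api/webhook                 # endpoint that receives webhook events
        pathType: Prefix
        backend:
          service:
            name: argocd-applicationset-controller
            port:
              number: 7000
```

With something like this in place, the GitHub webhook would point at https://applicationset.example.com/api/webhook and be configured to send Pull Request events.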

The template section of the same ApplicationSet looks like this:

    apiVersion: argoproj.io/v1alpha1
    kind: ApplicationSet
    metadata:
      name: myapps
    spec:
      generators:
      - pullRequest:
        # ...
      template:
        metadata:
          name: 'pr-{{number}}-{{repo}}'
        spec:
          source:
            repoURL: 'https://github.com/myorg/myinfra.git'
            targetRevision: 'argocd/sync-branch'
            path: kubernetes/helm-charts/environments/blueground-envs
            helm:
              parameters:
              - name: "myreposervice.tag"
                value: "{{head_sha}}"
              valueFiles:
              - ./values/preview/preview-datastores-values.yaml
              - ./values/preview/preview-apps-values.yaml
          project: default
          destination:
            server: https://kubernetes.default.svc
            namespace: default

The above snippet demonstrates the template used to generate the ArgoCD application when a Pull Request is matched. ArgoCD has built-in support for Helm, so the only thing we had to do was point it to our infra repo and the relevant Helm folder, and override at least the image tag of the service being previewed. The process of building the image and pushing it to ECR (our choice of Docker image registry) is handled by another pipeline and will be described below.
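For readers less familiar with Helm: a parameter such as myreposervice.tag is a dotted path into the chart's values, so the override in the template has the same effect as the values fragment below. How the chart turns that value into an image reference is specific to each chart and not shown here; the tag value is a placeholder.

```yaml
# Equivalent of the Helm parameter "myreposervice.tag" set by the template.
myreposervice:
  tag: "<head SHA of the Pull Request>"   # placeholder, filled from {{head_sha}}
```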

We decided to use the following naming convention for the environments: pr-{{number}}-{{repo}}, which indicates the originating PR and GitHub repository respectively.

We also used ArgoCD sync waves for our deployment approach. This way, we can deploy in an ordered fashion with discrete steps: first bring up our databases, then seed them with test data, and finally deploy our applications, which makes for a smoother onboarding.
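To illustrate that ordering, here is a minimal sketch of how sync waves are typically declared. The argocd.argoproj.io/sync-wave annotation is ArgoCD's own; the resource names, images and the choice of three waves are hypothetical placeholders, not our actual manifests.

```yaml
# Wave 0: the ephemeral database comes up first.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: preview-db                          # hypothetical name
  annotations:
    argocd.argoproj.io/sync-wave: "0"
spec:
  serviceName: preview-db
  replicas: 1
  selector:
    matchLabels:
      app: preview-db
  template:
    metadata:
      labels:
        app: preview-db
    spec:
      containers:
      - name: db
        image: postgres:16                  # placeholder image
        env:
        - name: POSTGRES_PASSWORD
          value: preview                    # placeholder, not for real use
---
# Wave 1: a Job seeds the database once wave 0 is healthy.
apiVersion: batch/v1
kind: Job
metadata:
  name: preview-db-seed                     # hypothetical name
  annotations:
    argocd.argoproj.io/sync-wave: "1"
spec:
  backoffLimit: 3
  template:
    spec:
      restartPolicy: OnFailure
      containers:
      - name: seed
        image: example.com/seed-job:latest  # placeholder image
---
# Wave 2: the applications are deployed last.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myreposervice
  annotations:
    argocd.argoproj.io/sync-wave: "2"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myreposervice
  template:
    metadata:
      labels:
        app: myreposervice
    spec:
      containers:
      - name: app
        image: example.com/myreposervice:latest   # placeholder; the tag is overridden per PR
```

ArgoCD applies lower-numbered waves first and waits for their resources to become healthy before moving on, which is what gives the databases, the seeding step and the applications their order.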

Image Build and PR comments

We use Kubernetes, which means our deployable artifacts are Docker images. To build the Docker image that will be used in our newly provisioned environment, we use Jenkins with a multibranch workflow, which builds and pushes Docker images for every application whose Pull Request has been labeled with preview.

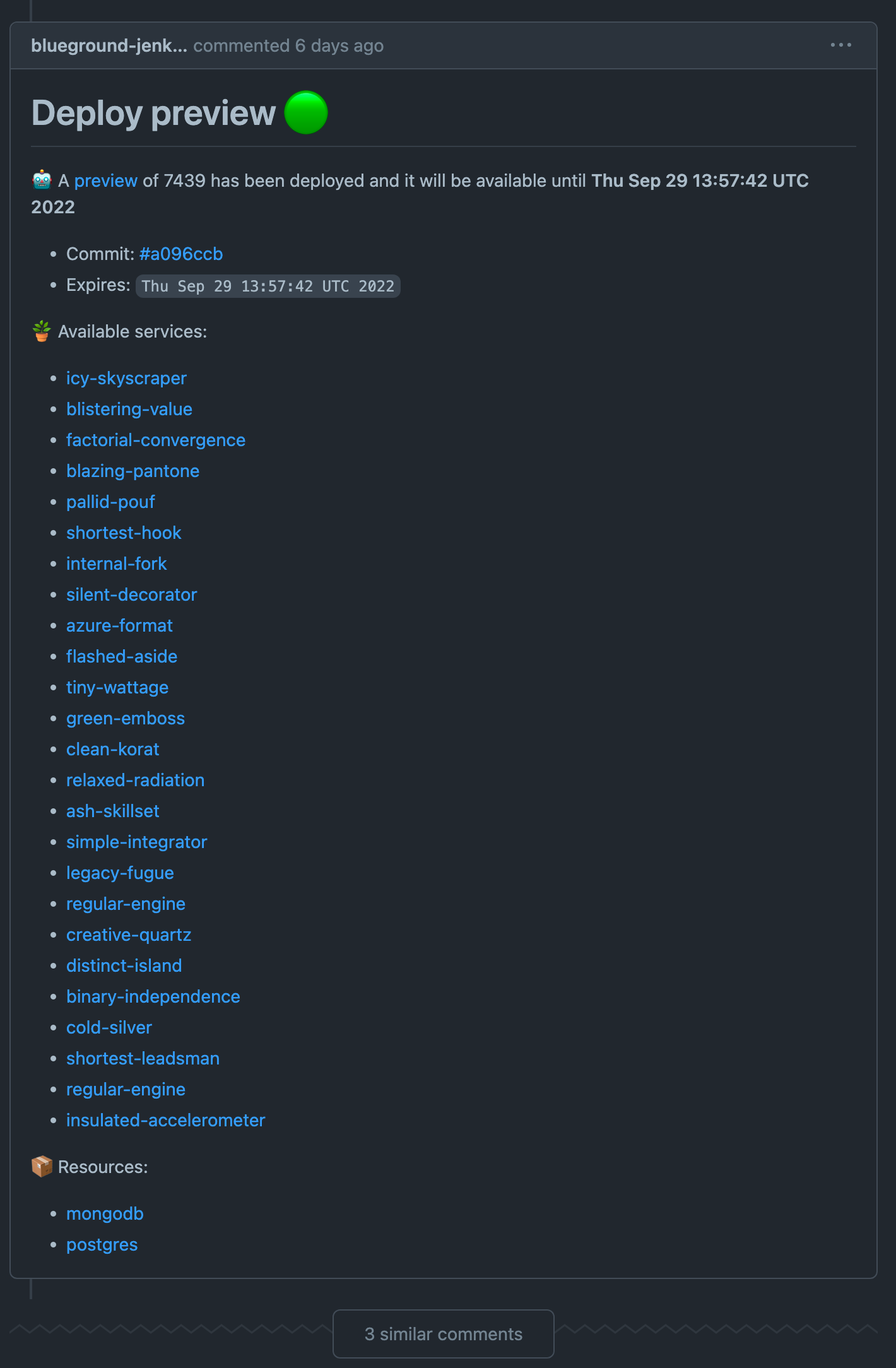

After the image is built and pushed, we wait until the application is healthy using the ArgoCD CLI, and then we add a comment on the GitHub Pull Request with information about the deployment result (service endpoints on success, or a failure notice on timeout).

Since we have two flows (environment provisioning and image build), we can end up with race conditions. A common case is that the Docker image is not yet ready when the deployment needs it, which leaves the application’s Pod in an ImagePullBackOff state. Since Kubernetes keeps retrying the image pull with an exponential back-off (capped at 5 minutes between attempts), the environment eventually heals itself once the image is pushed.

Environment Termination

So when should we destroy a deployment preview, scale the cluster in, and save on costs? By default, the ArgoCD ApplicationSet controller destroys the environment once the Pull Request is closed or loses its label. That worked well… but not great. As with everything, we had to define a global limit on the number of deployment previews available, and that limit had to be enforced automatically. With a limit in place, long-running PRs would gradually pile up and hit it, leaving no room for the latest PRs. In other words, we needed a more versatile termination strategy that would protect us from cost surges while keeping the deployment previews accessible.

That strategy came to be the following 👇

We terminate a deployment preview when any of the following events occur:

- Its Pull Request is closed

- Its Pull Request loses the “preview” label (someone removes it)

- Its TTL expires (we set it to 48 hours)

- It happens to be the oldest PR when the global limit is reached (implemented using a FIFO queue)

Once the ApplicationSet controller has deployed a preview environment, it will not destroy it unless the PR is closed or its labels stop matching the PR generator's filter. To overcome this limitation, we implemented a Jenkins pipeline that removes the label from open Pull Requests when the TTL or capacity criteria are met. This pipeline runs at frequent intervals.

And here is the overall architecture in one picture 👇

Final thoughts

Deployment previews on k8s are a game-changing feature that’s rather easy to implement. Make them play nice with your code hosting platform and your team will absolutely ❤️ them.

Next time, we’ll talk about another team favorite: how we populate our pre-production environments with data!

Stay tuned!